This book isn’t just about robotics and war. It’s about a mega-shift in world power where power is accessible by individuals, or a failed state where children rule; where the uneducated and unsocialized can get their hands on mass destructibles—“losers” gaining control and power. A world where “any sufficiently advanced technology is indistinguishable from magic.” You quote inventor and futurist Ray Kurzweil: “It feels like all 10 billion of us are standing in a room up to our knees in flammable liquid waiting for someone, anyone, to light a match.”

The forms of government we have often become tied in with technology. It’s the gunpowder revolution that allows kingdoms and the rise of the state to happen versus city-states or dukedoms, linked with the idea of mass mobilization, which is how democracies ultimately triumphed in war. Well, what happens when you have another technological revolution? We’ve already seen so many different ways that the state is under siege today—the inability to control its borders, not just regarding immigration, but broader forces in globalization: global war, disease flow, and now the terrorism game. And so we may be seeing the end of two long-held monopolies. For the last 400 years the state was the dominant player in war and for the last 5,000 years war was something involving only human participation. Now we’ve seen these other entities come along and challenge the state, plus we’re seeing more and more machines being utilized in the fighting of war.

Robots are only as responsible as the person operating them, according to what you’ve written. When robots operate on AI, who takes responsibility for the act? Couple that with the human need to blame someone, to have someone who must take responsibility, to exact punishment, someone who must pay. What is the potential for robotics to change the face not only of law, but also of human behavior?

There’s a great scene in the book where Human Rights Watch staff argue over accountability, and start referencing not the Geneva Conventions, but the Star Trek Prime Directive. It illustrates how we’re grasping at straws to figure out right from wrong in this new world. When rules are being rewritten in relation to what’s possible with technology, they also start to be rewritten on what is legally and ethically proper. How does this play in the war of ideas against radical groups? The idea is that if a mistake happens because of the technology, people assume we must have meant for it to happen, because the US has lost the benefit of the doubt in a lot of countries around the world. It is hard for people to digest that the mistake was just a technological error, that there was no human behind that accident. People won’t believe that.

And yes, people want to place fault on someone. But where do you place fault when there’s not a distinct “this one decision is what made it happen,” but rather, “it was a series of decisions” or “it was no decision that made it happen”? If the system kills the wrong person, whom do you hold responsible? The operator? Commander? Software programmer? No one has a really good answer. I try to identify certain pathways that can certainly be shored up through the law: some sense of responsibility within the system. You can’t say, “Well, you know, I turned it on and then it did what it wanted.” No. You still hold responsibility for turning it on. I use the parallel of dog ownership. Even if a dog bites someone, the owner bears some responsibility if they helped set that chain of events into motion, such as if they trained the dog wrong, or they put the dog into a situation where it was likely to bite a kid. They can’t just say, “Well, the dog did it, it’s not my fault.” People need to bear some responsibility for the things that happen with these systems, even if they get more and more technologically advanced. That’s the endpoint for me.
The very few times that people talk about the ethics of robotics, they really only talk about it in the Isaac Asimov way—of the machines themselves. But the real ethical discussion needs to be of the people behind the machines.

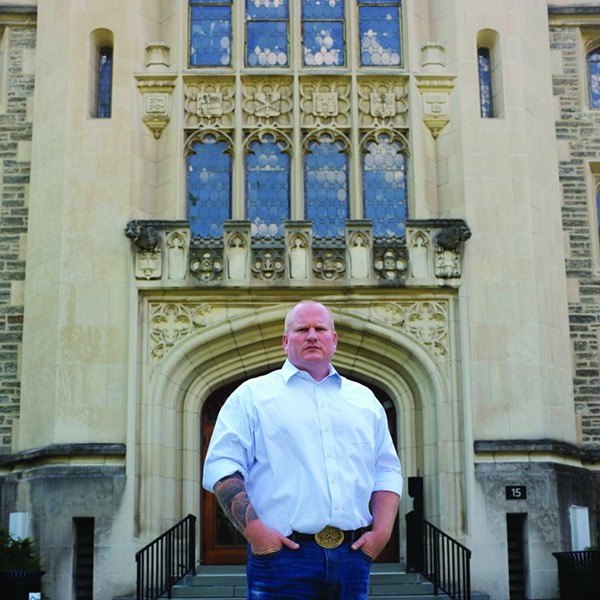

The Next Warrior

